Overview

Have you ever been overwhelmed by very long blog posts when all you need are the key takeaways? Instead of sifting through endless paragraphs, we can use an AI-based text summarizer to condense lengthy content into bite-sized insights and save precious time.

In this blog post, we will learn how to build a simple yet powerful text summarizer using LangChain, Streamlit, and an LLM. We will fetch web content, process it with an advanced LLM, and generate concise summaries, all in a few lines of code.

Let us begin the exciting journey.

Introduction

In our fast-paced and information-rich world, time is invaluable. Individuals are inundated with long-form content in the form of blog posts, articles, and reports, each teeming with insights and knowledge. Let’s be honest: who truly has the time to read through all those paragraphs to come up with key takeaways?

This is where an AI-powered text summarizer comes to the rescue. Instead of spending hours reading, we can use a summarizer to extract the key points in a matter of minutes. It is like having an assistant read the content on our behalf and highlight only the most important parts.

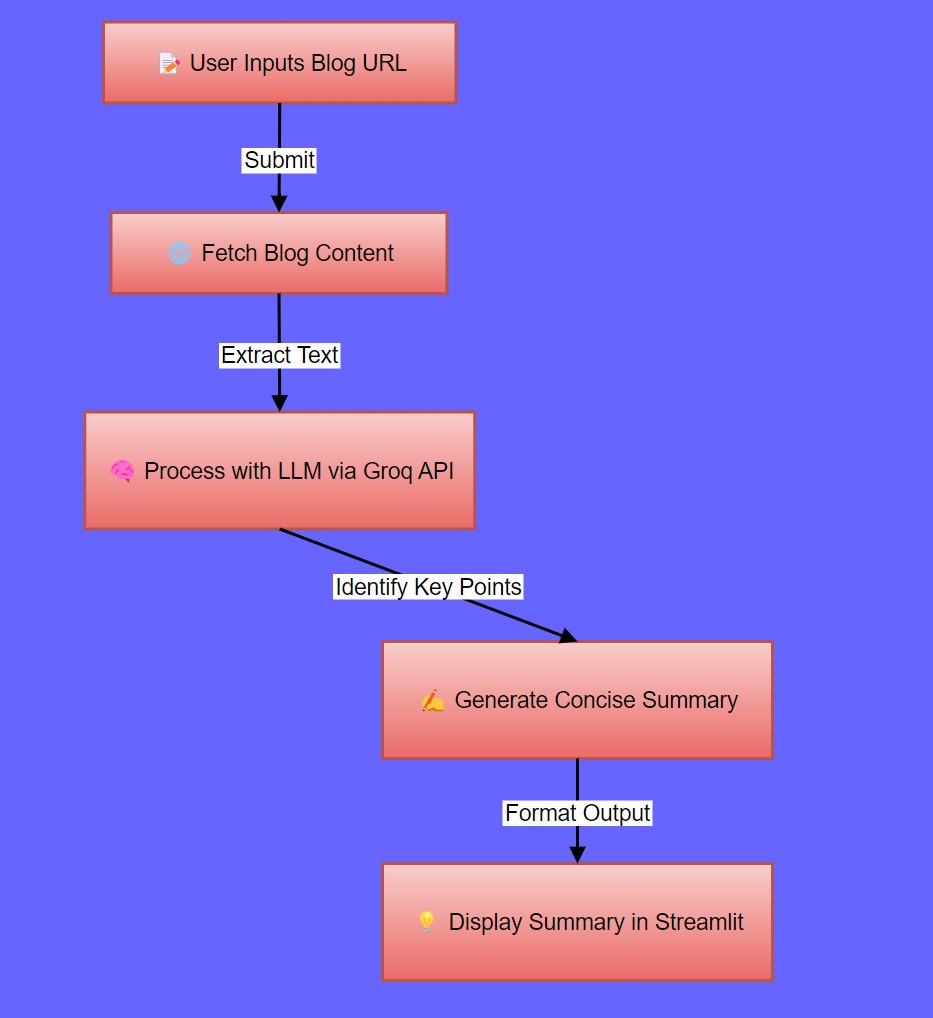

In this tutorial, we will develop our own text summarizer tool in three steps:

1. Fetch content from a blog URL: Simply provide the link, and the tool will grab the content for you.
2. Process the content using a Large Language Model (LLM): The LLM analyzes the text and identifies the most important points.
3. Generate a concise summary: Get the key takeaways in a clear and digestible format.

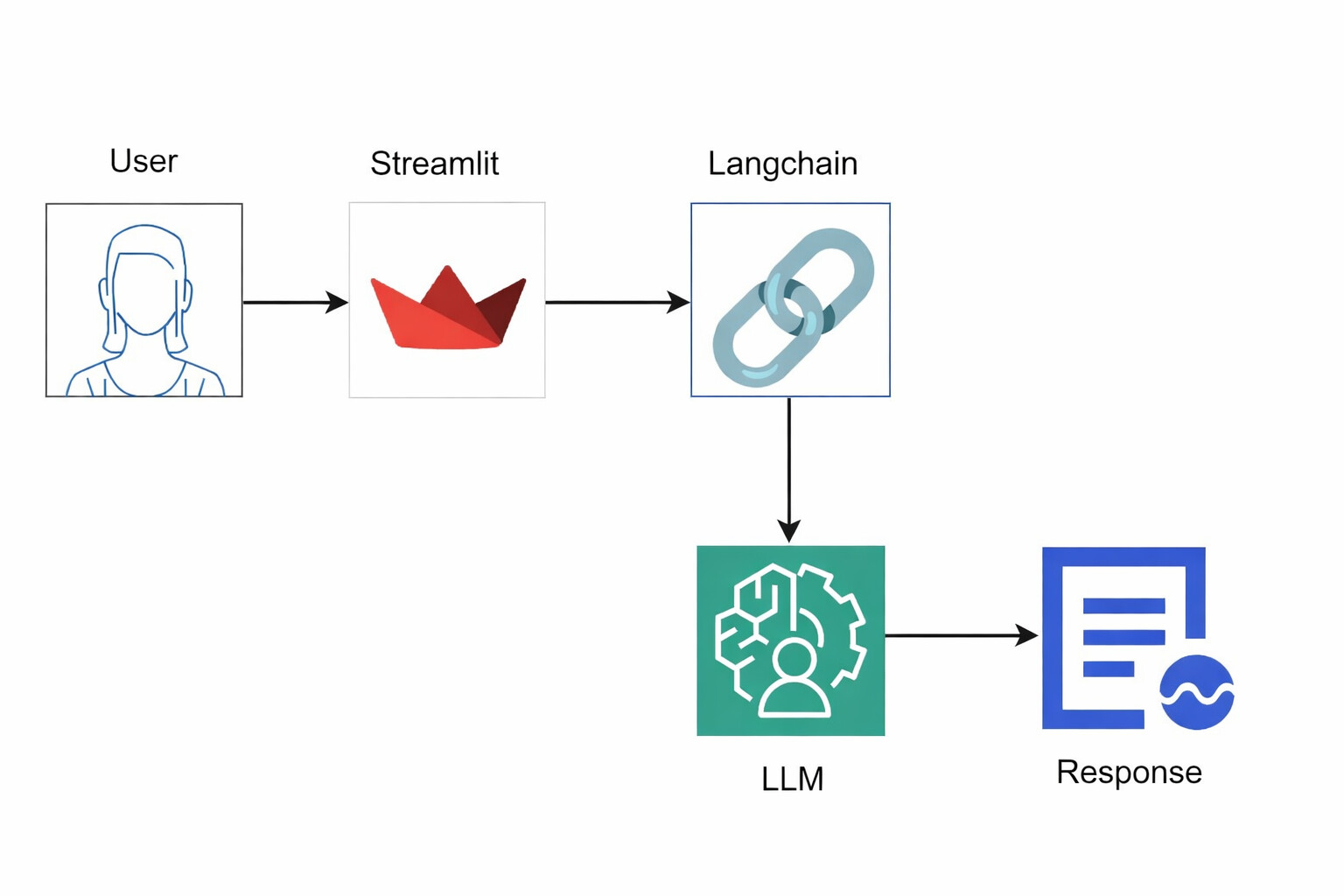

In this project, we will use LangChain to handle the summarization logic, Streamlit to create a clean and user-friendly interface, and an LLM (via the Groq API) to power the summarization. The flowchart below (Figure 1) gives an overview of how the text summarizer works.

Figure 1: Flowchart showing how to build a text summarizer using an LLM and Streamlit

Tools To Develop Text Summarizer App

We will use the following tools to build the text summarizer:

LangChain: The Framework for Chaining LLMs and Data Sources

LangChain is a powerful framework for building applications that connect large language models (LLMs) with various data sources. It makes tasks like summarization, question answering, and information extraction easier by abstracting away much of the complexity of building such workflows.

In this article, we will use LangChain and Streamlit to develop a text summarizer that processes blog content effortlessly.
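LangChain's chaining idea can be illustrated without the library itself. The toy sketch below mimics how LangChain Expression Language composes steps with the `|` operator (as in `prompt | llm | parser`). The `Step` class and the fake model are hypothetical stand-ins, not LangChain's actual `Runnable` implementation, which also supports streaming, batching, and async calls.

```python
from typing import Any, Callable


class Step:
    """Hypothetical pipeline step that can be chained with the | operator."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # `a | b` builds a new step that feeds a's output into b.
        return Step(lambda value: other.fn(self.fn(value)))

    def invoke(self, value: Any) -> Any:
        return self.fn(value)


# Stand-ins for a prompt template, a chat model, and an output parser.
prompt = Step(lambda inputs: f"Summarize: {inputs['text']}")
fake_llm = Step(lambda prompt_text: {"content": prompt_text.upper()})  # pretends to be an LLM
parser = Step(lambda message: message["content"].strip())

chain = prompt | fake_llm | parser
print(chain.invoke({"text": "LangChain links steps together."}))
# prints: SUMMARIZE: LANGCHAIN LINKS STEPS TOGETHER.
```

Each `|` simply wraps two functions into one, so the finished chain can be invoked like a single step, which is the convenience LangChain offers at a much larger scale.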

Streamlit: Building Interactive Front-End Applications with Ease

Streamlit is an open-source Python framework for building interactive, user-friendly web applications. With Streamlit, we can build web interfaces quickly and with very little code.

In this project, we will use Streamlit to build a simple, clean, and intuitive front end where the users can easily input the URL of a blog post and receive a summarized version in return.

LLM with Groq API

We will use an LLM served through the Groq API to perform the text summarization. The Groq API provides an efficient way to access a range of hosted open models. For this project, we will use the Gemma2-9b-It model.
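Because the `stuff` chain used later in this tutorial sends the entire page to the model in a single request, the model's context window limits how long a blog post can be. The sketch below estimates whether a text fits, assuming the rough rule of thumb of about four characters per token for English text (not Gemma's real tokenizer) and an assumed 8,192-token window; check Groq's model documentation for the limit it actually serves. When a text is too long, it splits it into overlapping chunks. All names here are hypothetical helpers.

```python
from typing import List

CHARS_PER_TOKEN = 4      # rough rule of thumb for English text, not a real tokenizer
CONTEXT_WINDOW = 8192    # assumed model limit; check the provider's documentation
RESERVED_TOKENS = 1024   # assumed room for the prompt wording and the generated summary


def estimate_tokens(text: str) -> int:
    """Approximate the token count from the character count."""
    return len(text) // CHARS_PER_TOKEN + 1


def fits_in_context(text: str) -> bool:
    """Return True if the text plausibly fits in a single 'stuff' request."""
    return estimate_tokens(text) <= CONTEXT_WINDOW - RESERVED_TOKENS


def split_into_chunks(text: str, chunk_chars: int = 8000, overlap: int = 200) -> List[str]:
    """Split text into overlapping character windows, e.g. for map-reduce summarization."""
    if overlap >= chunk_chars:
        raise ValueError("overlap must be smaller than chunk_chars")
    if not text:
        return []
    chunks = []
    start = 0
    while True:
        end = start + chunk_chars
        chunks.append(text[start:end])
        if end >= len(text):
            break
        start = end - overlap  # step back so neighboring chunks share some context
    return chunks


short_post = "word " * 1_000   # 5,000 characters
long_post = "word " * 10_000   # 50,000 characters
print(fits_in_context(short_post))          # True
print(fits_in_context(long_post))           # False
print(len(split_into_chunks(long_post)))    # 7
```

Each chunk could then be wrapped in its own `Document` and passed to `load_summarize_chain(..., chain_type="map_reduce")`, which summarizes the pieces separately and then combines the partial summaries. Splitting on raw characters can cut words in half; LangChain's `RecursiveCharacterTextSplitter` prefers paragraph and line breaks instead.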

Practical Implementation

The following is a step-by-step guide to developing the text summarizer tool.

Import Dependencies

We will first import the dependencies for this project.

```python
import streamlit as st
from langchain.prompts import PromptTemplate
from langchain_groq import ChatGroq
from langchain.chains.summarize import load_summarize_chain
from langchain_community.document_loaders import UnstructuredURLLoader
from langchain.schema import Document
import validators
```

Explanation

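- `streamlit` is imported to create the web app interface.
- `PromptTemplate` from `langchain.prompts` defines the prompt format used for summarization.
- `ChatGroq` is LangChain's chat model wrapper for the Groq API; it provides the model used for the summarization task.
- `load_summarize_chain` loads a ready-made chain of operations that performs summarization.
- `UnstructuredURLLoader` loads content from a given URL.
- `Document` represents a piece of text content that LangChain chains operate on.
- `validators` is used to validate the provided URL.

These imports assume that the `streamlit`, `langchain`, `langchain-groq`, `langchain-community`, `unstructured`, and `validators` packages are installed. They follow pre-1.0 LangChain import paths, and newer LangChain releases may have moved some of them.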

Streamlit App Configuration

```python
st.set_page_config(page_title="LangChain: Summarize Blog Content", page_icon="📝")
st.title("📝 LangChain: Summarize Blog Content")
st.subheader("Summarize Website Content")
```

Explanation

- Sets the page title and icon for the Streamlit app.
- Displays the main title and a subheader on the app.

Initialize Session State Variables

```python
if "llm" not in st.session_state:
    st.session_state.llm = None

if "api_key_valid" not in st.session_state:
    st.session_state.api_key_valid = False
```

Explanation

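Initializes the session state variables that store the language model (`llm`) and the API key validation status (`api_key_valid`). Because Streamlit reruns the whole script on every user interaction, values kept in `st.session_state` persist across reruns, whereas ordinary variables are reset each time.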
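To see why the `if ... not in st.session_state` guards matter, here is a plain-Python simulation of Streamlit's rerun model. A dictionary stands in for `st.session_state`, and the hypothetical `run_script` function plays the role of one top-to-bottom execution of the app; this only imitates the behavior and does not use Streamlit.

```python
from typing import Optional


def run_script(session_state: dict, entered_key: Optional[str] = None) -> dict:
    """Simulate one top-to-bottom run of the Streamlit script."""
    # Guarded initialization: only runs when the keys are missing (the first run).
    if "llm" not in session_state:
        session_state["llm"] = None
    if "api_key_valid" not in session_state:
        session_state["api_key_valid"] = False

    # An ordinary variable is created from scratch on every run.
    runs_seen_by_local_variable = 0
    runs_seen_by_local_variable += 1

    if entered_key:
        # Hypothetical stand-in for creating the ChatGroq client after "Enter" is clicked.
        session_state["llm"] = f"model-for-key-ending-{entered_key[-4:]}"
        session_state["api_key_valid"] = True

    return {"state": dict(session_state), "local": runs_seen_by_local_variable}


session_state: dict = {}
run_script(session_state, entered_key="demo-key-1234")  # run where the user submits the key
later = run_script(session_state)                       # any later interaction reruns the script
print(later["state"]["api_key_valid"])  # True: the stored value survived the rerun
print(later["local"])                   # 1: the local variable started over
```

Without the guards, every rerun would reset `api_key_valid` to `False`, and the user would have to re-enter the key before each summary.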

Sidebar Input for API Key

Here, we create a password input field and an “Enter” button in the sidebar for the Groq API key. The key gives us access to the models hosted on Groq, which we then use to generate summaries.

```python
with st.sidebar:
    groq_api_key = st.text_input("Groq API Key", value="", type="password")

    if st.button("Enter"):
        if groq_api_key.strip():
            try:
                # Initialize the model with the provided API key
                st.session_state.llm = ChatGroq(model="Gemma2-9b-It", groq_api_key=groq_api_key)
                st.session_state.api_key_valid = True
                st.success("✅ API Key validated successfully!")
            except Exception as e:
                st.session_state.api_key_valid = False
                st.error(f"Error initializing ChatGroq: {e}")
        else:
            st.warning("Please enter the Groq API Key.")
```

Explanation

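- A sidebar is created where the user can enter their Groq API key.
- When the user enters the key and clicks “Enter”, the `Gemma2-9b-It` model is initialized with it. If initialization succeeds, the key is marked as valid and a success message is shown. Note that creating the `ChatGroq` object does not necessarily send a request to Groq, so a mistyped key may only surface as an error later, when a summary is requested.
- If the field is empty, a warning is shown; if initializing the model raises an exception, the error message is displayed.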
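A stricter check could make one small test request before marking the key as valid. The sketch below keeps that logic separate from Streamlit so it can be tested on its own. `check_api_key` and the `probe` callable are hypothetical names; in the app, `probe` might be something like `lambda key: ChatGroq(model="Gemma2-9b-It", groq_api_key=key).invoke("Hi")`, which is an assumption on our part and costs one request per check.

```python
from typing import Callable, Tuple


def check_api_key(api_key: str, probe: Callable[[str], None]) -> Tuple[bool, str]:
    """Return (is_valid, message) for a user-supplied API key.

    `probe` is a hypothetical callable that sends one tiny request using the key
    and raises an exception if the request is rejected.
    """
    key = api_key.strip()
    if not key:
        return False, "Please enter the Groq API Key."
    try:
        probe(key)
    except Exception as exc:  # report whatever error the client raised
        return False, f"Error validating API key: {exc}"
    return True, "API Key validated successfully!"


def fake_probe(key: str) -> None:
    """Fake server that accepts exactly one key, for demonstration only."""
    if key != "valid-key":
        raise PermissionError("401 Unauthorized")


print(check_api_key("   ", fake_probe))
print(check_api_key("wrong-key", fake_probe))
print(check_api_key(" valid-key ", fake_probe))
```

In the sidebar, the returned flag would be stored in `st.session_state.api_key_valid` and the message shown with `st.success` or `st.error`.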

Define The Prompt Template

```python
prompt_template = """
Provide a summary of the following blog content in 300 words:
Context:{text}
"""

prompt = PromptTemplate(template=prompt_template, input_variables=["text"])
```

Explanation

- A prompt template is defined for summarizing blog content.
- The `{text}` placeholder in the prompt will be replaced with the actual blog content.
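With `chain_type="stuff"`, LangChain's summarize chain places the documents' content into the prompt variable named `text`, which is why the template uses `{text}`. The plain-Python sketch below imitates that step: it joins the page content of several documents, using a blank line as the separator (which mirrors what we understand to be the stuff chain's default), and fills the template with `str.format`, matching `PromptTemplate`'s default f-string style. `SimpleDocument` and `stuff_prompt` are hypothetical stand-ins, not LangChain classes.

```python
from dataclasses import dataclass, field
from typing import List

PROMPT_TEMPLATE = """
Provide a summary of the following blog content in 300 words:
Context:{text}
"""


@dataclass
class SimpleDocument:
    """Hypothetical stand-in for LangChain's Document: text plus optional metadata."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def stuff_prompt(docs: List[SimpleDocument], separator: str = "\n\n") -> str:
    """Join all documents' text and place it in the template's {text} slot."""
    combined = separator.join(doc.page_content for doc in docs)
    return PROMPT_TEMPLATE.format(text=combined)


docs = [
    SimpleDocument("First paragraph of the post."),
    SimpleDocument("Second paragraph, which mentions {curly braces}."),
]
print(stuff_prompt(docs))
```

Braces inside the blog text are harmless here because `str.format` does not re-parse substituted values. Since everything ends up in one prompt, long pages can still exceed the model's context window.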

Input for Blog URL

```python
blog_url = st.text_input("Blog URL", label_visibility="collapsed")
```

Explanation

A text input field is created for the user to enter the URL of the blog they want to summarize.
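The app relies on `validators.url` to reject malformed input. If you would rather avoid the extra dependency, a rough standard-library approximation is sketched below. `looks_like_url` is a hypothetical helper and is deliberately less thorough than `validators`: it only checks for an http(s) scheme and a dotted host name, so it also rejects hosts such as `localhost`.

```python
from urllib.parse import urlparse


def looks_like_url(candidate: str) -> bool:
    """Rough check that a string is an http(s) URL with a dotted host name."""
    try:
        parsed = urlparse(candidate.strip())
    except ValueError:
        # urlparse raises ValueError for some malformed inputs (e.g. unbalanced IPv6 brackets).
        return False
    has_host = bool(parsed.netloc) and "." in parsed.netloc
    return parsed.scheme in ("http", "https") and has_host


for url in ["https://example.com/blog/post", "example.com", "ftp://example.com", "https://"]:
    print(url, looks_like_url(url))
```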

Button For Text Summarization

```python
if st.button("Summarize Blog Content from URL"):
    if not st.session_state.api_key_valid:
        st.error("API Key not validated. Please enter a valid API key and click 'Enter' first.")
    elif not blog_url.strip():
        st.error("Please provide a blog URL.")
    elif not validators.url(blog_url):
        st.error("Please enter a valid URL.")
    else:
        try:
            with st.spinner("Fetching and summarizing content..."):
                # Load the blog content from the provided URL
                loader = UnstructuredURLLoader(
                    urls=[blog_url],
                    ssl_verify=False,
                    headers={
                        "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_5_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36"
                    },
                )
                text = loader.load()[0].page_content

                # Create a document object for summarization
                document = Document(page_content=text)

                # Chain for summarization using the defined prompt template
                chain = load_summarize_chain(st.session_state.llm, chain_type="stuff", prompt=prompt)
                result = chain.invoke({"input_documents": [document]})
                output_summary = result.get("output_text", "No summary available.")

                st.success(output_summary)
        except Exception as e:
            # st.exception expects the exception object itself and shows its traceback
            st.exception(e)
```

Explanation

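- Button Click: Triggers the text summarizer when clicked.
- Validations: Checks that the API key has been validated and that a blog URL is provided and correctly formatted.
- Fetching Content: Uses `UnstructuredURLLoader` to extract text from the given URL, with SSL verification disabled and a browser-like User-Agent header, since some sites reject requests that do not look like they come from a browser. Disabling SSL verification avoids certificate errors on some sites, but it also removes protection against tampered connections, so it is safer to leave it enabled where possible.
- Summarization Process: Wraps the content in a `Document` object, runs `load_summarize_chain` with the Groq model and the prompt, and displays the generated summary.
- Error Handling: Catches and displays any exceptions.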
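`UnstructuredURLLoader` downloads the page and uses the `unstructured` library to pull out readable text. As a rough illustration of the extraction step only (no downloading), the sketch below strips tags from an HTML string with Python's built-in `html.parser`, skipping `script` and `style` content and starting a new line after block elements. `TextExtractor` and `extract_text` are hypothetical helpers and far cruder than `unstructured`'s partitioning.

```python
from html.parser import HTMLParser
from typing import List

SKIP_TAGS = {"script", "style"}  # content never shown to readers
BLOCK_TAGS = {"p", "div", "li", "h1", "h2", "h3", "h4", "h5", "h6", "section", "article"}


class TextExtractor(HTMLParser):
    """Collect visible text from HTML, starting a new line after block elements."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: List[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self._skip_depth += 1
        elif tag == "br":
            self.parts.append("\n")

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1
        elif tag in BLOCK_TAGS:
            self.parts.append("\n")

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.parts.append(data)


def extract_text(html: str) -> str:
    """Return the visible text of an HTML string, one block per line."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    raw = "".join(parser.parts)
    # Collapse runs of whitespace inside each line and drop empty lines.
    lines = (" ".join(line.split()) for line in raw.splitlines())
    return "\n".join(line for line in lines if line)


sample = (
    "<html><head><style>p {color: red}</style></head>"
    "<body><h1>Title</h1><p>Hello <b>world</b>.</p>"
    "<script>var x = 1;</script></body></html>"
)
print(extract_text(sample))
```

The resulting string could then be wrapped in `Document(page_content=...)`, just as the app does with the loader's output.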

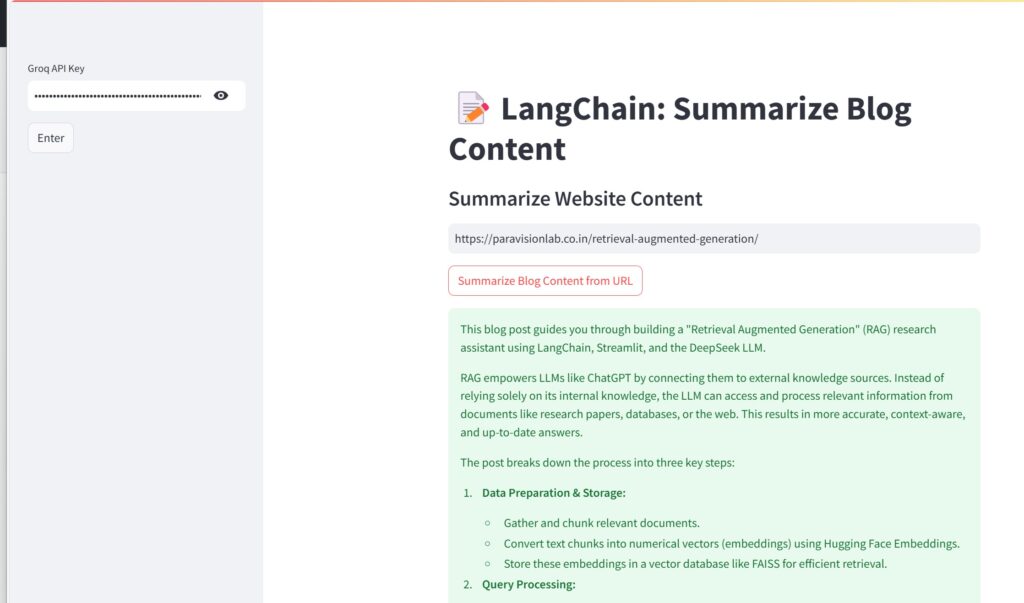

Visualize The Results

The figure below (Figure 2) shows the text generated by our text summarizer tool for a blog URL using LangChain and the Gemma2-9b-It model.

Figure 2: Output generated by the text summarizer

Conclusions

In this article, we developed a simple yet powerful text summarizer using LangChain, Streamlit, and the Groq API. Here are the key takeaways:

- We developed a LangChain-based text summarizer that integrates an LLM with external data sources and handles everything from fetching the content to generating the summary.
- We built a user-friendly Streamlit interface, with minimal code, where a user can enter a blog URL and receive a summary.
- We used the Groq API to access the “Gemma2-9b-It” model. A tool like this can pick out key insights from text and save users a lot of time.

Together, these tools form a text summarizer that saves time and streamlines workflows, helping readers get straight to the point without sifting through tons of content.

This project can be a great starting point for anyone hoping to build their own AI-driven text summarizer tools!


Frequently Asked Questions

How does this AI-powered text summarizer tool work?

The tool fetches the content of a given blog URL, processes it with a Large Language Model (LLM), and generates a concise summary. LangChain handles the summarization logic, while Streamlit provides an easy-to-use web interface.

How do Large Language Models (LLMs) help in text summarization?

LLMs analyze text by understanding context, identifying key points, and generating concise summaries. They can process large amounts of text quickly, up to the limit of their context window, which makes them well suited to text summarization.

What is LangChain?

LangChain is an open-source framework for building applications by integrating Large Language Models (LLMs) with various data sources. It makes complex workflows, such as text summarization, question answering, and content extraction, much simpler by efficiently managing interactions between LLMs and external data.