Overview

Building a conversational AI application may seem daunting, but in my experience, modern frameworks like LangChain and tools like Streamlit make it surprisingly simple. Combined with OllamaLLM, these technologies let you build an interactive chatbot that generates thoughtful, dynamic responses.

This step-by-step guide walks you through each part of the process. By the end, you will have a fully functioning AI-powered assistant that can converse with users in natural, human-like language.

Introduction to LangChain, Ollama, and Streamlit

Before diving into the code, let’s quickly introduce the three key technologies we’ll be using in this project: LangChain, Ollama, and Streamlit.

LangChain: Bridging the Gap Between LLMs and Applications

LangChain is an open-source framework that makes it easier to integrate large language models such as GPT-3 and GPT-4 into applications. What really sets LangChain apart is its ability to orchestrate complex workflows by chaining components together, and to handle tasks such as memory management, prompt engineering, and integration with external systems like databases and APIs.

In short, it makes a “smart assistant” far more manageable and customizable to build, so you can easily create applications that make decisions, answer questions, and respond intelligently.

For more details on LangChain, check out the LangChain Documentation.

Ollama: Running Powerful Language Models Locally

Ollama is an open-source tool for downloading and running large language models locally, and it powers the conversational side of our AI-powered assistant. In this guide, we use LangChain's OllamaLLM class with Google's Gemma 2 model (the 2B-parameter “gemma2:2b” variant). Gemma is noted for its ability to generate human-like text, which makes it a good fit for our use case.

By combining Ollama with LangChain, the application can pass user input through a structured prompt and generate coherent responses, both essential qualities for a helpful AI assistant.

Ollama offers a library of models, each with its own strengths and use cases. Table 1 lists a selection of popular models along with their parameter counts and download sizes. Depending on your needs and hardware, you can pick the model that best suits your AI assistant.

Table 1: List of various LLM models available in Ollama

| Model | Parameters | Download size |
|---|---|---|
| Llama 3.3 | 70B | 43GB |
| Llama 3.2 | 3B | 2.0GB |
| Llama 3.2 (1B) | 1B | 1.3GB |
| Llama 3.2 Vision | 11B | 7.9GB |
| Llama 3.2 Vision (90B) | 90B | 55GB |
| Llama 3.1 | 8B | 4.7GB |
| Llama 3.1 (405B) | 405B | 231GB |
| Phi 4 | 14B | 9.1GB |
| Phi 3 Mini | 3.8B | 2.3GB |
| Gemma 2 (2B) | 2B | 1.6GB |
| Gemma 2 (9B) | 9B | 5.5GB |
| Gemma 2 (27B) | 27B | 16GB |
| Mistral | 7B | 4.1GB |
| Moondream 2 | 1.4B | 829MB |
| Neural Chat | 7B | 4.1GB |
| Starling | 7B | 4.1GB |
| Code Llama | 7B | 3.8GB |
| Llama 2 Uncensored | 7B | 3.8GB |
| LLaVA | 7B | 4.5GB |
| Solar | 10.7B | 6.1GB |

For more information, visit Ollama’s Official Website.
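As a rough way to narrow down Table 1, the sketch below filters the models by download size. It is a hypothetical helper (`models_within` is not part of Ollama or LangChain), and download size is only a loose lower bound on the memory a model needs at run time. Treat the result as a starting point, not a hardware guarantee.

```python
# Download sizes in GB, copied from Table 1 (829MB is written as 0.829)
MODEL_SIZES_GB = {
    "Llama 3.3": 43.0,
    "Llama 3.2": 2.0,
    "Llama 3.2 (1B)": 1.3,
    "Llama 3.2 Vision": 7.9,
    "Llama 3.2 Vision (90B)": 55.0,
    "Llama 3.1": 4.7,
    "Llama 3.1 (405B)": 231.0,
    "Phi 4": 9.1,
    "Phi 3 Mini": 2.3,
    "Gemma 2 (2B)": 1.6,
    "Gemma 2 (9B)": 5.5,
    "Gemma 2 (27B)": 16.0,
    "Mistral": 4.1,
    "Moondream 2": 0.829,
    "Neural Chat": 4.1,
    "Starling": 4.1,
    "Code Llama": 3.8,
    "Llama 2 Uncensored": 3.8,
    "LLaVA": 4.5,
    "Solar": 6.1,
}


def models_within(budget_gb: float, sizes: dict = MODEL_SIZES_GB) -> list:
    """Return the names of models whose download size fits the budget, largest first."""
    fitting = [name for name, size in sizes.items() if size <= budget_gb]
    # sorted() is stable, so models of equal size keep their table order
    return sorted(fitting, key=lambda name: sizes[name], reverse=True)


print(models_within(5.0)[:3])  # ['Llama 3.1', 'LLaVA', 'Mistral']
```

Sorting largest first reflects a simple heuristic: within a given budget, a larger model usually gives better answers. You can reverse the order if response speed matters more.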

Streamlit: A Simple Yet Powerful Web Framework

A framework for building interactive applications should be easy to use yet powerful enough to handle user inputs. Streamlit is a Python framework that enables developers to create stunning, interactive web applications with just a few lines of code.

With Streamlit, you can quickly build dashboards, data visualizations, and even AI applications. Because it is simple and works well with the LangChain and Ollama Python libraries, it is an excellent choice for the interface of a conversational AI assistant.

For further reading, explore the Streamlit Documentation.

Step-by-Step Guide to Building a Conversational AI Assistant

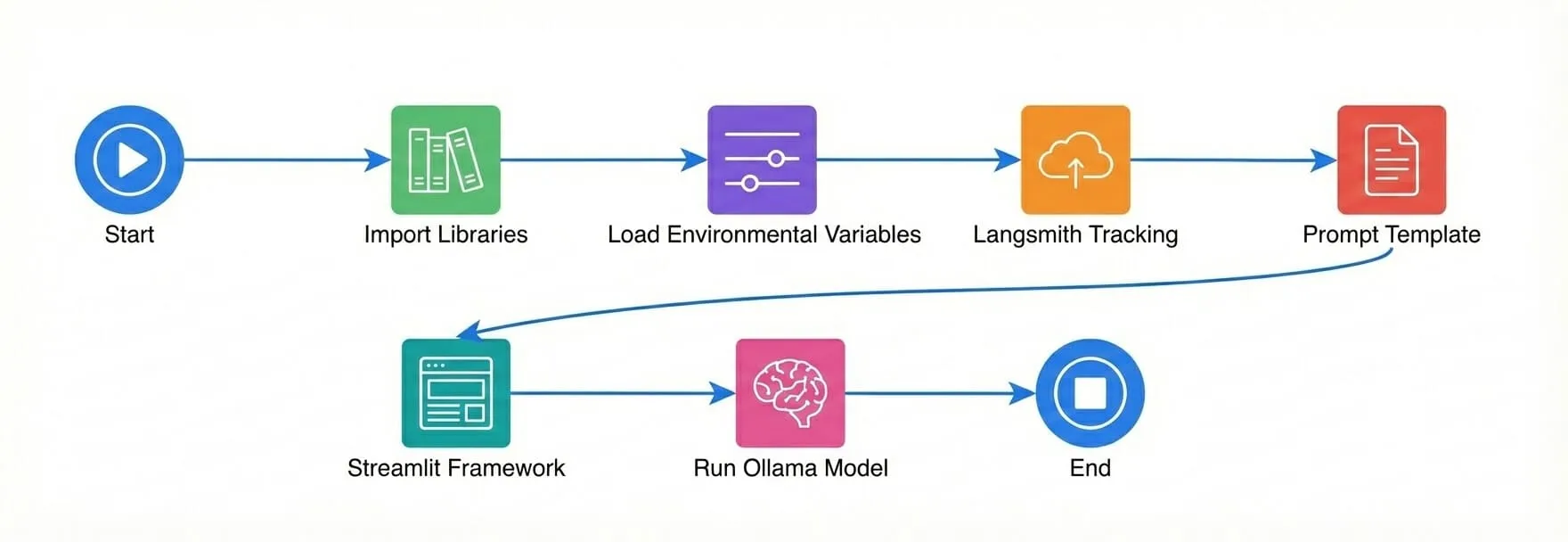

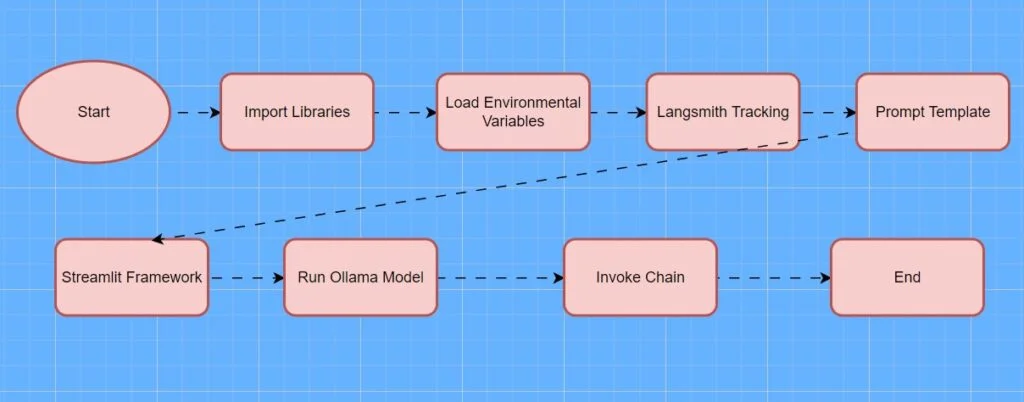

The code below sets up a basic Streamlit web app and integrates it with the LangChain framework. The goal is to create a chatbot that answers user-submitted questions using a language model served by Ollama (here, the “gemma2:2b” model). The code also loads environment variables and configures LangSmith tracing for LangChain. Figure 1 shows the steps involved in building the app.

Figure 1: Steps to build a chatbot using LangChain, Ollama, and Streamlit

Import Required Libraries

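```python
# Install dependencies first: pip install python-dotenv langchain-ollama langchain-core streamlit
import os
from dotenv import load_dotenv
from langchain_ollama import OllamaLLM
import streamlit as st
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.output_parsers import StrOutputParser
```

Explanation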

- os: Provides functions for interacting with the operating system, such as reading and setting environment variables.
- load_dotenv: A function from the python-dotenv package that loads environment variables from a .env file.
- OllamaLLM: A class from langchain_ollama used to communicate with language models served by a local Ollama instance.
- streamlit as st: The Streamlit library, used to build the interactive web application.
- ChatPromptTemplate: A LangChain class for defining prompt templates, that is, the structure of the messages sent to the chatbot.
- StrOutputParser: A LangChain class that converts the model's output into a plain string.

Load Environment Variables

```python
# Read KEY=VALUE pairs from a .env file into os.environ
load_dotenv()
```

The load_dotenv function reads variables from a .env file and adds them to the program's environment (os.environ). Storing sensitive data such as API keys in a .env file rather than in the source code keeps credentials out of version control and protects them from accidental exposure.
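To make the mechanism concrete, here is a deliberately simplified stand-in for load_dotenv. It is not python-dotenv's implementation: `simple_load_dotenv` is a hypothetical name, and it only handles plain `KEY=VALUE` lines, `#` comments, and surrounding quotes (no `export` prefixes, inline comments, or variable expansion). Like python-dotenv's default behavior, it leaves variables that are already set in the environment untouched unless `override=True`.

```python
import os
import tempfile
from pathlib import Path


def simple_load_dotenv(path: str, override: bool = False) -> dict:
    """Parse KEY=VALUE lines from a .env file and copy them into os.environ."""
    parsed = {}
    for raw_line in Path(path).read_text().splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blank lines, comments, and malformed lines
        key, value = line.split("=", 1)
        key, value = key.strip(), value.strip().strip('"').strip("'")
        parsed[key] = value
        # Existing environment variables win unless override is requested
        if override or key not in os.environ:
            os.environ[key] = value
    return parsed


# Usage: write a throwaway .env file with hypothetical placeholder values
with tempfile.TemporaryDirectory() as tmp:
    env_file = Path(tmp) / ".env"
    env_file.write_text('LANGCHAIN_API_KEY="your-key-here"\nLANGCHAIN_PROJECT=chatbot-demo\n')
    values = simple_load_dotenv(str(env_file))

print(values)
```

In the real app, calling load_dotenv() with no arguments searches for a .env file starting from the calling script's directory, so you normally do not pass a path.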

LangSmith Tracing Configuration

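```python
# Enable LangSmith tracing only when an API key is set; os.getenv returns None otherwise
api_key = os.getenv("LANGCHAIN_API_KEY")
if api_key:
    os.environ["LANGCHAIN_API_KEY"] = api_key
    os.environ["LANGCHAIN_TRACING_V2"] = "true"
    os.environ["LANGCHAIN_PROJECT"] = os.getenv("LANGCHAIN_PROJECT", "default")
```

Explanation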

- os.environ["LANGCHAIN_API_KEY"]: The LangSmith API key, read from the environment or the .env file. LangChain uses it to authenticate with LangSmith when sending traces.
- os.environ["LANGCHAIN_TRACING_V2"] = "true": Enables LangSmith tracing, so each run of the chain is logged and can be inspected in the LangSmith web UI.
- os.environ["LANGCHAIN_PROJECT"]: The LangSmith project name (from the .env file or the environment) under which traces are grouped.

Define Prompt Template

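```python
# The system message fixes the assistant's role; {question} is filled in at run time
prompt = ChatPromptTemplate.from_messages(
    [
        ("system", "You are a helpful assistant. Please respond to the question asked."),
        ("user", "Question:{question}"),
    ]
)
```

Explanation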

- ChatPromptTemplate.from_messages: Creates a template from a list of (role, message) pairs that defines the structure of each exchange with the chatbot.
- ("system", "You are a helpful assistant..."): The system message defines the assistant's role and provides guidelines for how it should behave.
- ("user", "Question:{question}"): The user message. Its {question} placeholder is replaced with the user's actual input at run time.
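ChatPromptTemplate uses Python f-string-style `{placeholders}` by default, so filling the template amounts to formatting each message with the supplied variables. The plain-Python sketch below imitates that step with `str.format`. `format_messages` here is a hypothetical helper for illustration; with LangChain itself, you would call `prompt.format_messages(question=...)`, which returns message objects rather than tuples.

```python
# (role, template) pairs mirroring the prompt defined above
MESSAGES = [
    ("system", "You are a helpful assistant. Please respond to the question asked."),
    ("user", "Question:{question}"),
]


def format_messages(templates, **variables):
    """Fill each {placeholder} with str.format, keeping the role label."""
    return [(role, template.format(**variables)) for role, template in templates]


formatted = format_messages(MESSAGES, question="What is LangChain?")
for role, text in formatted:
    print(f"{role}: {text}")
```

The system message contains no placeholders and passes through unchanged. Only the user message picks up the question, and that filled-in message list is what the model actually receives.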

Streamlit Framework (User Interface)

```python
# Page title and a single text box for the user's question
st.title("Langchain Demonstration With Gemma Model")
input_text = st.text_input("What question you have in mind?")
```

Explanation

- st.title("Langchain Demonstration With Gemma Model"): Sets the title shown at the top of the web page.
- input_text = st.text_input("What question you have in mind?"): Renders a text box. Whatever the user types is stored in the variable input_text, which is an empty string until they enter something.

Run the LLM and Process Input

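```python
# Requires a running Ollama server with the model pulled (ollama pull gemma2:2b)
llm = OllamaLLM(model="gemma2:2b")
output_parser = StrOutputParser()
chain = prompt | llm | output_parser

# Only call the model once the user has typed a question
if input_text:
    st.write(chain.invoke({"question": input_text}))
```

Explanation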

- llm = OllamaLLM(model="gemma2:2b"): Initializes the Ollama language model with the “gemma2:2b” model.
- output_parser = StrOutputParser(): Initializes an output parser that converts the model's response into a string.
- chain = prompt | llm | output_parser: Uses LangChain's pipe (|) syntax to build a chain: the formatted prompt is passed to the model, and the model's output is passed to the parser.
- if input_text: Checks whether the user has entered any input.
- st.write(chain.invoke({"question": input_text})): If there is input, runs the chain with the user's question and displays the response in the app.
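To see what the `|` operator does conceptually, here is a toy re-creation of the pipe pattern in plain Python. It is not LangChain's Runnable implementation: `Step`, `fake_llm`, and the echo response are hypothetical stand-ins, and no model is called. Each `a | b` simply produces a new step that runs `a` and feeds its output into `b`, and `invoke` runs the whole pipeline.

```python
from typing import Any, Callable


class Step:
    """Toy stand-in for a LangChain runnable: wraps a function and supports `|`."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # a | b returns a new step that runs a, then passes its output to b
        return Step(lambda value: other.fn(self.fn(value)))

    def invoke(self, value: Any) -> Any:
        return self.fn(value)


# Hypothetical components standing in for prompt, llm, and output_parser
prompt = Step(lambda inputs: f"Question:{inputs['question']}")
fake_llm = Step(lambda text: {"content": f"Echo -> {text}"})  # no real model call
output_parser = Step(lambda message: message["content"])

chain = prompt | fake_llm | output_parser
print(chain.invoke({"question": "What is Ollama?"}))
```

The fake model returns a dictionary so that the parser step has something to unwrap. The real OllamaLLM already returns a string; StrOutputParser matters most with chat models that return message objects, and keeping it in the chain makes it easy to swap model types later.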

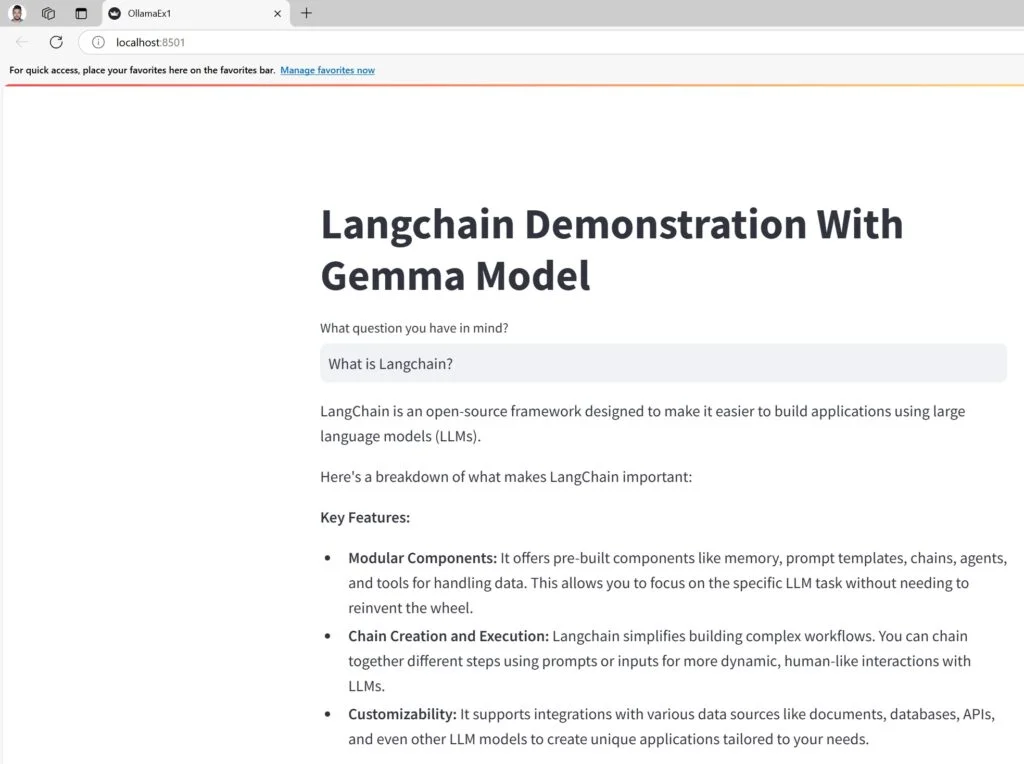

Visualizing The Results

The screenshot shows the web interface built with Streamlit: the user types a question, and the application responds using the Gemma model via LangChain and Ollama.

Figure 2: Streamlit app UI showing chatbot response using the Gemma model

Check the source code here: Source Code

Conclusions

The code builds an interactive question-answering chatbot as a Streamlit web app, using LangChain and the “gemma2:2b” model served by Ollama. The web interface makes it easy for users to enter questions. Each question is inserted into a structured prompt, and the model's answer is rendered on the page.

With LangSmith tracing enabled through the LangChain API key, this setup is also a practical starting point for developing and debugging interactive, live AI applications.

Frequently Asked Questions

What is LangChain, and why is it important for building AI applications?

LangChain is an open-source framework that simplifies integrating large language models (LLMs) like GPT-3 and GPT-4 into applications. It handles complex workflows, memory management, and external integrations, making it easier to build customizable and intelligent AI assistants.

What makes Streamlit a good choice for building the AI application interface?

Streamlit is a Python framework that allows developers to create interactive web applications quickly with minimal code. It works well alongside LangChain and Ollama, making it a good choice for AI interfaces such as chatbots and dashboards.

Is prior experience in AI or coding required to follow this guide?

While some basic Python knowledge is helpful, the guide is designed to be beginner-friendly. With modern tools like LangChain, Ollama, and Streamlit, even those new to AI can build a functional conversational AI assistant step by step.